The Turing Test (Part 1)

Related to the question of ‘what makes us, us?’, the possibility of computer minds is often explored in fiction and is a hotly debated, complex area of interdisciplinary research. Could computers have minds, or think? And if not, what is the mark of the mental that distinguishes them from us? E. J. Lowe sets the scene nicely:

Our supposed rationality is one of the most prized possessions of human beings and is often alleged to be what distinguishes us most clearly from the rest of animal creation…indeed… there appear to be close links between having a capacity for conceptual thinking, being able to express one’s thoughts in language, and having an ability to engage in processes of reasoning. Even chimpanzees, the cleverest of non-human primates, seem at best to have severely restricted powers of practical reasoning and display no sign at all of engaging in the kind of theoretical reasoning which is the hallmark of human achievement in the sciences. However, the traditional idea that rationality is the exclusive preserve of human beings has recently come under pressure from two quite different quarters. On the one hand, the information technology revolution has led to ambitious pronouncements by researchers in the field of artificial intelligence, some of whom maintain that suitably programmed computers can literally be said to engage in processes of thought and reasoning. On the other hand, ironically enough, some empirical psychologists have begun to challenge our own human pretensions to be able to think rationally. We are thus left contemplating the strange proposition that machines of our devising may soon be deemed more rational than their human creators.[1]

There is considerable debate about what it would mean to say that a machine has a mind. But it’s clearly not an unimaginable proposition; sci-fi offers plenty of examples.

Here are two questions we might want to answer:

- What is the mark of the mental? I.e. what is it that distinguishes or defines a mind?

- How do we test for a mind?

There are lots of ways we might answer the first question: creativity, rationality, use of language, the ability to have feelings…

(If you’re interested, we can cover some of these in a future piece.)

As for the second, sci-fi frequently gives the same answer: the Turing Test.[2] But we’re getting ahead of ourselves…

A. M. Turing, mathematician and pioneering computer theorist, designed a test to decide whether a computer could think. A computer would pass the test if it could simulate a thinking person so well that anyone interacting with it would be fooled into thinking it was human. Turing spelled this out in terms of what he called ‘the imitation game’:

The “imitation game”… is played with three people, a man (A), a woman (B), and an interrogator (C) who may be of either sex. The interrogator stays in a room apart from the other two. The object of the game for the interrogator is to determine which of the other two is the man and which is the woman… The interrogator is allowed to put questions to A and B… In order that tones of voice may not help the interrogator, the answers should be written, or better still, typewritten. The ideal arrangement is to have a teleprinter communicating between the two rooms… We now ask the question, “What will happen when a machine takes the part of A in this game?” Will the interrogator decide wrongly as often when the game is played like this [between a machine and a human being] as he does when the game is played between a man and a woman? These questions replace our original, “Can machines think?”[3]

Or, as it’s explained in the film of the same name:

To see how this works, imagine you are confined to a room with a computer. On the screen are two chat windows, each showing your conversation with a different respondent. Using the computer, you can send and receive typed messages to and from the two respondents. One of them is another ordinary human being (who speaks your language). The other is a computer program, designed to provide responses to your questions (perhaps a chatbot like Cleverbot).[4] You are allotted a period of time – let's say 10 minutes – during which you can send whatever questions you like to the two respondents.

Your task is to try to determine on the basis of their answers which is the human being. The computer passes the test if you can’t tell which is which (except by chance – the test is repeated to rule out luck).
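The point about ruling out luck can be made concrete with a little arithmetic. The sketch below is an illustration only: Turing's paper does not fix a number of rounds or a pass threshold, so the function names (`p_value_at_least`, `machine_passes`), the 50% guessing rate, and the 0.05 cut-off are all assumptions made for this example. It asks how likely it is that an interrogator would pick out the human at least this often by guessing alone.

```python
from math import comb


def p_value_at_least(correct: int, trials: int, chance: float = 0.5) -> float:
    """Probability of at least `correct` right identifications in `trials`
    rounds if the interrogator were purely guessing (binomial tail)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


def machine_passes(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """Hypothetical pass rule (an assumption, not Turing's): the machine
    passes if the interrogator's hit rate is not significantly better
    than guessing at significance level `alpha`."""
    return p_value_at_least(correct, trials) >= alpha


if __name__ == "__main__":
    for hits in (5, 8, 9, 10):
        p = p_value_at_least(hits, 10)
        print(f"{hits}/10 correct: p = {p:.4f}, machine passes: {machine_passes(hits, 10)}")
```

Under these assumptions, an interrogator who spots the human in 9 of 10 rounds is very unlikely to be guessing, so the machine fails; 5 of 10 is roughly what coin-flipping would produce, so the machine passes.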

Whether a computer or program can pass the Turing Test is an empirical question – that is, it can only be answered by observation (unlike many of the philosophical questions we’ve considered here, it’s not a logical question, answerable by reason alone). We won’t know until we try. And indeed, there is an annual Turing Test competition in which people enter their computer programs to compete alongside humans.

As yet, no computer program has incontrovertibly passed the test, although programs have sometimes been mistaken for humans (there is debate concerning the results, and what they mean). But that’s not to say that a computer couldn’t. And fiction recognises the possibility. We see a nice example of a Turing Test being conducted in the 2013 British sci-fi flick, The Machine (a film that is not at all represented by the first two sentences of its Wikipedia synopsis):

Later, the computer scientist conducts another Turing Test on a much more interesting candidate – a program that, like Cleverbot, learns from conversation (the scientist is the interrogator, and ‘green’ and ‘red’ the ‘A’ and ‘B’ from Turing’s imitation game):

Scientist: I’m going to start the Turing Test now. Green. Fugley Munter is a good name for a beautiful Hollywood actress; a teddy bear; or a wedding dress design?

Green: Teddy bear.

Scientist: Red. Describe love in three words.

Red: Home, happiness, reproduction.

Scientist: Green.

Green: Happiness, sadness, life.

Scientist: Green. Mary saw a puppy in a window. She wanted it. What did Mary want?

Green: The window.

Scientist: Why?

Green: Windows look out onto the world. They are pretty and help you feel less alone.

(An aside: what questions would you ask to tell the computer from the human being? Are there any questions you could ask that would assure you that something had a mind?[5])

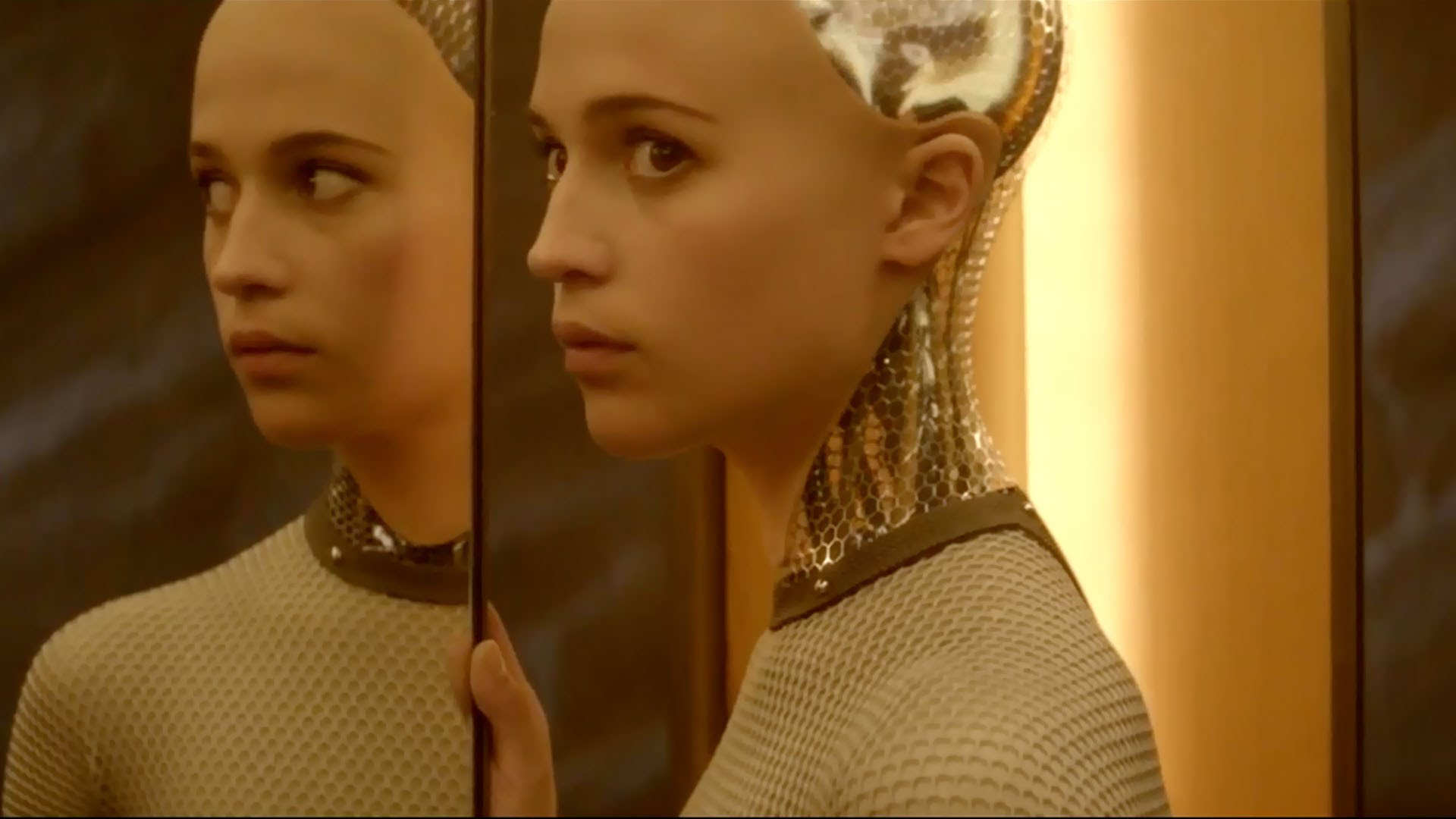

Green doesn’t pass the Turing Test, but it’s not hard to imagine a computer that would. In Westworld, Ford mentions that the hosts passed the Turing Test within the first year. C-3PO, the synthetics from Alien, the Cylons from Battlestar Galactica – all would pass the Turing Test with flying colours. Indeed, the creator of Ava from Ex Machina was so convinced she’d pass a Turing Test that he had the interrogator test her knowing that she was a machine:

Caleb: …in the Turing Test, the machine should be hidden from the examiner. And there’s a control, or –

Nathan: I think we’re past that. If I hid Ava from you, so you just heard her voice, she would pass for human. The real test is to show you she is a robot. Then see if you still feel she has consciousness.

After all, even if something passes the Turing Test, is that enough? Is the bar in the right place? Does passing the test necessarily mean you have a mind? Let us know your thoughts on Twitter or via the comments, and we'll delve deeper into those questions in Part 2.

Footnotes

[1] E. J. Lowe, An Introduction to the Philosophy of Mind (Cambridge: CUP, 2000), p. 193.

[2] Indeed, the Turing Test has become shorthand for any language-based test to distinguish humans from machines. We’ll go through some of our favourites in an upcoming piece.

[3] Alan Turing, Computing machinery and intelligence, Mind Vol. 59 No. 236 (1950), pp. 433-434.

[4] Speaking of chatbots, remember when Microsoft built a chatbot and released it into the wilds of Twitter?

[5] A potentially worrying implication of this, of course, is that we mightn’t have a way of assuring ourselves that anyone else has a mind, human or machine. This is the problem of other minds.

References

- Lowe, E. J. An Introduction to the Philosophy of Mind, Cambridge: CUP, 2000.

- Turing, A. Computing machinery and intelligence, Mind Vol. 59 No. 236, 1950, pp. 433-461.
